The Digital Innovation Lab (DIL) seeks to create best practices for handling big data. We are exploring automated and crowd-sourced processes for harvesting data sets such as maps and city directories.

Harvesting City Directories

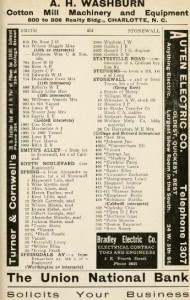

City directories, similar to today’s phone books, listed the residents and businesses of a town or group of towns. But these directories provided more than a simple list of names: they also recorded occupations and, often, familial status. Many directories also contained separate street directories, in which residents were listed a second time by street. These street directories offered a block-by-block listing of residents, enabling the modern reader to imagine walking through the town’s neighborhoods and business districts.

While northern directories may have excluded non-white residents, southern publishers decidedly did not. These publishers recreated and reaffirmed Jim Crow segregation in their directories by demarcating race, either by segregating non-white residents into separate listings or by marking them with a symbol, such as * or “c”. Although this practice is incredibly cruel by today’s standards, the resulting directories nonetheless provide historians and demographers with a wealth of information.

There are many challenges to extracting the content of digitized city directories into usable output. First, the optical character recognition (OCR) performed on the directories is limited; the software often cannot detect * or “c”, both of which are critical indicators of race. Second, the OCR output is typically a single text or XML file, which is not easily searchable. Finally, a typical directory page contains a great deal of “noise”, that is, irrelevant material such as advertisements and headers. Advertisements can appear as print ads or as text running vertically along the side of a page, and their placement is rarely consistent even within a single directory. On its own, a machine cannot distinguish relevant from “noisy” information, nor can it detect the subtle nuances of an inconsistent and unpredictable historical collection.
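To illustrate why noise is hard to separate from content automatically, here is a minimal Python sketch of a rule-based line filter. The rules it uses (an entry begins with a capitalized surname and given name and contains at least one lowercase word, such as an occupation abbreviation; all-caps lines are headers or ad copy) are assumptions invented for this example, not the DIL's method, and the sample lines in the comments are made up.

```python
import re

# Hypothetical rule: an entry starts with a capitalized surname and given
# name and contains at least one standalone lowercase word (e.g. an
# occupation abbreviation such as "lab"). Real directories vary widely.
ENTRY_PATTERN = re.compile(r"^[A-Z][a-z']+\s+[A-Z][a-z]+.*\b[a-z]{2,}\b")


def classify_line(line: str) -> str:
    """Return 'entry' if an OCR line looks like a directory listing, else 'noise'."""
    text = line.strip()
    if not text:
        return "noise"
    # Assumption: lines with only capital letters are running headers
    # or advertisement copy (e.g. "CHARLOTTE CITY DIRECTORY").
    letters = [c for c in text if c.isalpha()]
    if letters and all(c.isupper() for c in letters):
        return "noise"
    # Everything else must match the assumed entry shape, so stray
    # fragments such as page numbers ("Page 112") are treated as noise.
    return "entry" if ENTRY_PATTERN.match(text) else "noise"
```

A filter this simple will misclassify ads set in mixed case, vertical text that OCR has split into fragments, and entries that OCR has garbled, which is one reason human review remains necessary.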

During the 2011-2012 academic year, the DIL created a parser to extract all of the entries from the general alphabetical listing of Charlotte, NC, residents in the 1911 city directory. The entries were transformed into database fields, which made them searchable and enabled mapping and other visual analytics.
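The idea of turning each entry into database fields can be sketched in Python with the standard `re` and `sqlite3` modules. The entry layout assumed here (surname, given name, optional * or (c) marker, occupation, street address), the `residents` table, and the helper names `parse_entry` and `load_entries` are all hypothetical; the DIL parser's actual rules are not described in this post.

```python
import re
import sqlite3

# Hypothetical entry layout, e.g. "Adams John (c) lab 210 E 2d":
# surname, given name, optional race marker (* or (c)), occupation, address.
# This layout is an assumption for illustration, not the DIL's actual rules.
ENTRY_RE = re.compile(
    r"^(?P<surname>[A-Z][A-Za-z']+)\s+"
    r"(?P<given>[A-Z][A-Za-z.]*)\s+"
    r"(?:(?P<marker>\*|\(c\))\s+)?"
    r"(?P<occupation>[a-z][a-z ]*?)\s+"
    r"(?P<address>\d.*)$"
)


def parse_entry(line):
    """Split one directory line into named fields, or return None if it doesn't fit."""
    match = ENTRY_RE.match(line.strip())
    # groupdict() yields None for the marker when no symbol was printed.
    return match.groupdict() if match else None


def load_entries(lines, conn):
    """Insert parseable lines into a `residents` table; return the lines that failed."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS residents ("
        "surname TEXT, given TEXT, marker TEXT, occupation TEXT, address TEXT)"
    )
    rejected = []
    for line in lines:
        fields = parse_entry(line)
        if fields is None:
            rejected.append(line)  # kept for manual (e.g. crowd-sourced) review
            continue
        # Named placeholders are filled from the dict returned by parse_entry.
        conn.execute(
            "INSERT INTO residents VALUES "
            "(:surname, :given, :marker, :occupation, :address)",
            fields,
        )
    conn.commit()
    return rejected
```

The sketch stores the race marker exactly as printed rather than interpreting it, and it returns the lines it could not parse instead of discarding them, since those are the kind of gaps that crowd-sourced review would need to fill. Once loaded, ordinary SQL queries (for example, filtering by `occupation` or `address`) make the listing searchable.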

Next, we will extend this work from a single directory to all digitized North Carolina directories. Crowd-sourcing will be used to fill gaps left by the parser and to verify parsing accuracy. If this succeeds, we will generalize to the national level, with the eventual goal of creating an interface that lets users harvest city directories on their own.