We’re working hard to finish the diPH beta by the end of December, along with our pilot project, Visualizing the Long Women’s Movement. The pilot has given us many opportunities to refine our thinking about how the plugin will work and how data might be structured within a typical diPH project (more on that in a future update).

The oral history pilot has led us in some interesting and unexpected directions. Most notably, it has pushed us to think about how to connect audio and text to a visualization. We wanted to annotate (“tag”) oral history transcripts to create multiple points of entry into, and paths of exploration through, each interview. But we also wanted users to interact with the actual audio. In a more traditional setting, the primary interaction is usually with the text transcript, and researchers often never hear the original recording. We were also reluctant to splice the audio into stand-alone clips, because we didn’t want excerpts heard out of context.

These requirements led us to a solution that preserves each complete audio file while still creating meaningful points of entry into a set of interviews: we link the audio file to the text transcript.

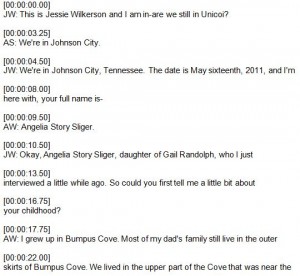

We ran each of the edited transcripts through DocSoft:AV, an audio-mining tool built on Dragon speech recognition. DocSoft:AV produces a time-stamped text version of the transcript, with a timestamp every few seconds (the exact interval depends on the rhythm of the speaker). This process turns a conventional transcript into a segmented transcript, which will allow diPH users to move around in the audio and the transcript in sync.
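To make the idea of a segmented transcript a little more concrete, here is a minimal sketch in Python of how time-stamped segments could be stored and looked up. It assumes a hypothetical tab-separated `start<TAB>end<TAB>text` layout with times in seconds; that layout, and the names `Segment`, `parse_segments`, and `segment_at`, are our own illustration, not DocSoft:AV's real export format or diPH's actual code. Given the current playback time, a binary search finds the segment being spoken, which is what lets the audio and the text stay in step.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One time-stamped chunk of a transcript (times in seconds)."""
    start: float
    end: float
    text: str


def parse_segments(lines):
    """Parse hypothetical 'start<TAB>end<TAB>text' lines into Segments.

    This tab-separated layout is an assumption for illustration only;
    it is not DocSoft:AV's actual output format.
    """
    segments = []
    for line in lines:
        start, end, text = line.split("\t", 2)
        segments.append(Segment(float(start), float(end), text.strip()))
    # Keep segments in playback order so they can be binary-searched.
    return sorted(segments, key=lambda s: s.start)


def segment_at(segments, t):
    """Return the segment being spoken at playback time t, or None."""
    starts = [s.start for s in segments]
    # Index of the last segment that starts at or before t.
    i = bisect_right(starts, t) - 1
    if i >= 0 and t < segments[i].end:
        return segments[i]
    return None
```

Because the segments are sorted by start time, each lookup is logarithmic in the number of segments, which matters little for one interview but keeps the sync cheap when the audio position updates many times per second.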

We applied the timestamps (start and end times) to content that the SOHP had already tagged. Once the front-end visualizations are complete, users will be able to click on a data point (a marker on a map, for instance) and then listen to, and read along with, the corresponding segment of the interview. Although each data point will be associated with a specific segment, users will be able to move beyond that segment at any time: they can go back to the beginning, jump forward, or repeat sections. And, of course, users may listen to the entire audio file from start to finish, with the accompanying text rolling by like lyrics on a karaoke machine, as this rough demo shows:

[vimeo]http://vimeo.com/54795851[/vimeo]
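The behavior in the demo can be sketched in a few lines. In this minimal Python illustration, a tagged passage carries start and end times, opening it seeks the player to the tag's start, and the player is deliberately not clamped to the tag, so a listener can rewind, skip ahead, or play the whole file while the matching text is highlighted. The names `Tag`, `segments_for_tag`, and `Player` are hypothetical and chosen for this example; this is not how the diPH front end is actually implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One time-stamped chunk of a transcript (times in seconds)."""
    start: float
    end: float
    text: str


@dataclass(frozen=True)
class Tag:
    """A hypothetical tagged passage, e.g. the one behind a map marker."""
    label: str
    start: float
    end: float


def segments_for_tag(segments, tag):
    """Return the segments that overlap the tag's [start, end) window."""
    return [s for s in segments if s.start < tag.end and s.end > tag.start]


class Player:
    """Sketch of a player that keeps audio position and transcript in sync.

    Opening a tag only seeks to its start; the position is clamped to the
    whole recording, not to the tag, so users can move beyond the segment.
    """

    def __init__(self, segments, duration):
        self.segments = sorted(segments, key=lambda s: s.start)
        self.duration = duration
        self.position = 0.0

    def open_tag(self, tag):
        self.seek(tag.start)

    def seek(self, t):
        # Stay within the recording: 0 <= position <= duration.
        self.position = min(max(t, 0.0), self.duration)

    def highlighted(self):
        """Text to highlight, karaoke-style, at the current position."""
        for s in self.segments:
            if s.start <= self.position < s.end:
                return s.text
        return None
```

For example, opening a tag that spans 5 to 10 seconds starts playback inside the second segment and highlights it; calling `seek(0.0)` afterwards simply returns the listener to the beginning of the interview.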

We will create visual cues inside the transcript to indicate whether a particular section of the text has already been tagged (perhaps an icon or a different text color). Eventually, users will be able to highlight a word or phrase in order to annotate (tag) the interview themselves; that is, users will be able to create their own data points within the project. We are also exploring the possibility of letting users edit the transcript through a wiki-style editing tool.

Ultimately, the audio/transcript component of diPH will be fully integrated into projects rather than standing apart from the other entry points. It will be embedded alongside the other visualizations, so that users can see, hear, and read the project’s content together.

Look for more information about this project (coming soon), and stay tuned to our project blog to find out when you can start playing with diPH!